I love fast content as much as anyone with a Shopify or WordPress store and a coffee habit. But the moment you switch from “handcrafted posts” to AI-assisted, 1-click publishing across your blog and social channels, the stakes change. You get speed and scale, and also a bigger blast radius when something goes wrong.

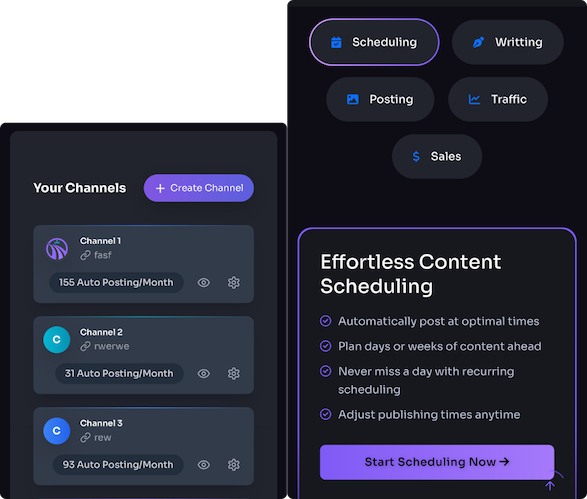

I’ve implemented AI content pipelines for teams using tools like Trafficontent, and the pattern is clear: the brands that win install practical, tech-first guardrails. The goal here is simple—publish at automation speed without torching legal compliance, brand trust, or search performance. Consider this your blueprint.

Map the risk landscape: what’s at stake when AI writes and publishes for you

AI can draft a blog in the time it takes to stir your oat milk, but it can also invent a product spec, borrow a copyrighted image, or “translate” a tagline into something your lawyer reads twice and then gently screams. When you auto-publish across Shopify, WordPress, and social, a single mistake propagates everywhere. Screenshots are forever; apologies are not.

Common crash points I see:

- Reputation damage from inaccuracies: wrong prices, misattributed quotes, or “AI confidence” on facts it clearly made up. Fixing it later doesn’t erase the shares.

- Legal landmines: missing FTC disclosures on affiliate links, DMCA takedowns for images you don’t own, ADA misses (no alt text), or privacy violations if you shove customer data into prompts.

- SEO fallout: spammy mass-publishing, thin content, or language variants that trip Google’s spam/auto-generated content policies. Translation ≠ localization.

- Privacy oops: multilingual posts that collect consent the wrong way or leak PII via UTM tags. “Hola, GDPR audit.”

Example: a multilingual auto-post touting “clinically proven” skincare in France without the required substantiation or disclaimer isn’t just awkward—it’s noncompliant. That’s why we add guardrails. It’s the difference between cruise control and nap-at-the-wheel. Funny once, expensive always.

Laws, platform rules, and industry standards to follow

Here’s your legal pre-flight check. It’s not law school—just the stuff that keeps you out of the spicy email thread.

- GDPR/CCPA data rules: lawful basis for processing, data minimization, consent for tracking, and data subject rights. Checkpoints: consent banner logs, data processing agreements with vendors, records of processing, and no PII in prompts. If you capture analytics, set retention windows and honor opt-outs.

- FTC endorsement and ad disclosures: make disclosures unmissable, with #ad or #sponsored on social and clear affiliate disclosures on-site. Checkpoints: disclosures at the start of captions, no ambiguous shorthand like “#sp,” and a record of comps and affiliations. Reference: FTC Endorsement Guides.

- COPPA (kids’ content): if under-13 users are in play, no personal data without parental consent. Checkpoints: age-gating or a hard “no collection.”

- CAN-SPAM: applies if your automation also sends commercial email. Checkpoints: physical postal address, opt-out link, truthful subject lines, and honoring unsubscribes within 10 business days.

- ADA/WCAG accessibility: alt text, captions/transcripts, descriptive links, and color contrast. Checkpoints: automated accessibility scan plus a human skim.

- Google’s spam/auto-generated content rules: publish helpful, original content; avoid mass doorway pages, keyword stuffing, and scraped duplication. Checkpoints: originality scan, intent coverage, and internal links mapped to actual user needs. Reference: Google Search Essentials spam policies.

- Platform terms (X, LinkedIn, Pinterest, Meta): rate limits, automation disclosures, anti-spam rules. Checkpoints: posting cadence caps, no duplicate blasts, and platform-specific ad disclosures.
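The social disclosure checkpoints are easy to lint before a caption goes out. Below is a minimal Python sketch, assuming captions are plain strings and treating “near the start” as the first 40 characters (an arbitrary choice). The tag lists and the `check_disclosure` name are hypothetical; the ambiguous tags reflect the kind of shorthand the FTC warns against, but this is a lint, not a legal test.

```python
import re

# Hypothetical policy lists; adjust to your legal team's guidance.
CLEAR_TAGS = {"#ad", "#sponsored"}
AMBIGUOUS_TAGS = {"#sp", "#spon", "#collab"}

HASHTAG_RE = re.compile(r"#\w+")


def check_disclosure(caption: str, window: int = 40) -> list[str]:
    """Return a list of problems; an empty list means the caption passes."""
    text = caption.lower()
    # Match whole hashtags so "#ad" does not match inside "#adventure".
    tags = [(m.start(), m.group()) for m in HASHTAG_RE.finditer(text)]
    problems = []
    if any(tag in AMBIGUOUS_TAGS for _, tag in tags):
        problems.append("ambiguous disclosure tag")
    clear_positions = [pos for pos, tag in tags if tag in CLEAR_TAGS]
    if not clear_positions:
        problems.append("missing #ad/#sponsored")
    elif clear_positions[0] > window:
        problems.append("disclosure not near the start")
    return problems


print(check_disclosure("#ad Loving this serum from @brand"))  # []
print(check_disclosure("Loving this serum #sp"))
```

The second call reports both the ambiguous tag and the missing clear disclosure, which is the combination the checkpoint is meant to catch.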

If this sounds like a lot, it is. But it’s still easier than explaining to your CMO why your blog vanished from search faster than a bad tweet.

Ethical principles: accuracy, transparency, and bias mitigation

This is where we keep the humans happy and the brand human.

Accuracy: AI is fast, not omniscient. Use source links, quick human checks, and versioned edits. Treat fact-checking like seatbelts—boring until you need it.

Transparency: If AI contributed, say so. A light touch is fine. Example disclosure options:

- “This article was researched and drafted with AI assistance and reviewed by [Editor Name], [Title].”

- “AI-assisted content: human-edited for accuracy and clarity.”

Bias mitigation: Run a simple bias pass before publishing. You’re not conducting a PhD study; you’re making sure the copy doesn’t punch down or stereotype.

- Bias checklist: neutral descriptors, inclusive imagery, no assumptions about gender/age/ability, and offers that don’t exclude protected groups.

- Counterfactual test: swap demographics in examples—does the tone stay respectful?

- Traceability: save the prompt and model version so you can explain how the sausage got made.
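The counterfactual test can be partly scripted: generate variants of a draft with demographic terms swapped, then have a reviewer compare tone side by side. A minimal sketch, assuming a small hand-maintained swap table; `SWAPS`, `swap_terms`, and `counterfactual_variants` are illustrative names, and a word-list swap is crude (it cannot tell "her" as "his" from "her" as "him").

```python
import re

# Illustrative swap table; a real one needs review and will still be imperfect
# (e.g. "her" can map to "his" or "him" depending on grammar).
SWAPS = {
    "gender": {"he": "she", "she": "he", "his": "her", "her": "his",
               "men": "women", "women": "men"},
    "age": {"young": "older", "older": "young"},
}


def swap_terms(text: str, mapping: dict[str, str]) -> str:
    """Swap whole words in a single pass so A->B and B->A don't cancel out."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, mapping)) + r")\b", re.IGNORECASE
    )

    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = mapping[word.lower()]
        # Keep sentence-initial capitalization.
        return swapped.capitalize() if word[0].isupper() else swapped

    return pattern.sub(replace, text)


def counterfactual_variants(text: str) -> dict[str, str]:
    """One swapped variant per dimension, for side-by-side tone review."""
    return {dim: swap_terms(text, mapping) for dim, mapping in SWAPS.items()}


for dim, variant in counterfactual_variants("She says young women love it.").items():
    print(dim, "->", variant)
```

Doing the swap in one regex pass matters: two sequential `str.replace` calls ("he" to "she", then "she" to "he") would undo each other.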

Also skip manipulative tricks: fake timers, invisible disclaimers, or SEO gibberish. If a tactic would annoy your best friend at brunch, it’ll annoy your audience too.

Privacy and data handling guardrails for automated content

Rule one: do not feed the model raw customer data. If you wouldn’t paste it into a public Slack, don’t jam it in a prompt. Yes, even if the prompt looks lonely.

- PII hygiene: never include names, emails, addresses, order IDs, or exact GPS coordinates. Use tokens like “Customer_A” and aggregate stats (e.g., “32% of repeat buyers”).

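- Anonymization: strip direct identifiers; where you need to join records, pseudonymize with keyed hashing; for analytics, consider k-anonymity or differential privacy. Pseudonymized data still counts as personal data under GDPR, so it needs the same protections.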

- Consent logging: store timestamp, scope (marketing vs analytics), region, and version of the consent text. Make revocation one click.

- Secure storage: TLS 1.2+ in transit, AES-256 at rest, least-privilege access, key rotation, and audited logs. Managed secrets (AWS KMS or Vault) beat sticky notes every time.

- UTM privacy: never stuff personal data in UTMs. Use campaign slugs, not emails. Audit your link builder and ensure referrers don’t leak sensitive query strings.

- Retention limits: define and enforce deletion windows (e.g., prompts/logs 90 days, analytics 13 months). If you keep everything forever, so will your regulators.

- CCPA opt-out: add a “Do Not Sell or Share My Personal Information” link, honor Global Privacy Control signals, and document your response SLA.
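The PII hygiene and anonymization items translate into a scrubbing step that runs before any text reaches a model. A minimal sketch, assuming simple regexes are acceptable as a first pass: it masks emails and phone-like numbers and turns customer IDs into stable tokens with HMAC-SHA256. The names `scrub_prompt` and `pseudonymize` are hypothetical, the patterns will miss names and street addresses, and the key is assumed to come from your secrets manager rather than source code.

```python
import hashlib
import hmac
import re

# Deliberately simple patterns: they catch emails and phone-like numbers, not
# names or street addresses (those need an NER tool or a human pass).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def scrub_prompt(text: str) -> str:
    """Replace emails and phone-like numbers before text goes into a prompt."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed token: the same input always yields the same token, and without
    the key nobody can recompute it. 10 hex chars keeps tokens short; use
    more if you have millions of customers, to avoid collisions."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"Customer_{digest[:10]}"


print(scrub_prompt("Contact jane.doe@example.com or +1 (555) 123-4567 about the order."))
# Contact [EMAIL] or [PHONE] about the order.
```

A keyed hash (rather than a plain SHA-256) matters here: order IDs and emails are guessable, so an unkeyed hash could be reversed by hashing candidate values.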

Boring? Maybe. But so is not paying fines.
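For the UTM privacy rule, the simplest enforcement point is the link builder itself. A minimal sketch, assuming a hypothetical slug policy (lowercase letters, digits, "-" and "_"): anything containing "@", ".", or spaces is rejected, which blocks emails and most raw identifiers but cannot catch a first name typed as a slug. `build_tracked_url` is an illustrative name, not a Trafficontent or analytics API.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

ALLOWED_UTM = {"utm_source", "utm_medium", "utm_campaign", "utm_content", "utm_term"}
# Hypothetical slug policy: lowercase letters, digits, "-" and "_" only, so
# emails, names with spaces, and IDs with "@" or "." are rejected outright.
SLUG_RE = re.compile(r"[a-z0-9_-]{1,64}")


def build_tracked_url(base_url: str, **utm: str) -> str:
    """Append UTM parameters, refusing anything that isn't a plain campaign slug."""
    for name, value in utm.items():
        if name not in ALLOWED_UTM:
            raise ValueError(f"unexpected parameter: {name}")
        if not SLUG_RE.fullmatch(value):
            raise ValueError(f"{name} must be a campaign slug, got {value!r}")
    parts = urlsplit(base_url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.extend(sorted(utm.items()))
    return urlunsplit(parts._replace(query=urlencode(query)))


print(build_tracked_url("https://shop.example.com/blog/linen-guide",
                        utm_source="pinterest", utm_medium="social",
                        utm_campaign="spring-sale"))
```

Failing loudly (raising) rather than silently stripping a bad value keeps the mistake visible to whoever configured the campaign.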

Safe automation architecture and 1-click publishing controls

Automated publishing is awesome until it isn’t. Build your setup like a house with locks, smoke alarms, and an extinguisher that actually sprays something other than hope.

- Staging by default: generate to a staging site first. Only approved items graduate to production.

- Approval gates: require human sign-off for high-risk categories (health, finance, legal claims, kids). Use roles and time-bound permissions for 1-click publish.

- Confidence thresholds: block publishing if toxicity > 0.02, readability score < 60, more than one major claim fails fact-checking, or similarity to any live URL > 20%.

- Rate limits: cap to, say, 5 posts/day per language and stagger social distribution to avoid spam flags.

- Automatic rollbacks: versioned posts with one-click rollback, plus unpublish API endpoints.

- Kill switch: a big red button that pauses all automation if your alerts start sounding like techno.

- Security basics: MFA, SSO, strict RBAC, API key rotation, WAF rules, and publish queues to stop runaway loops.
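The thresholds, sign-off rule, rate cap, and kill switch can live in one gate that the pipeline consults before every publish. A minimal sketch: the numbers are the example values from the list above, while `Draft`, `PublishGate`, and their fields are hypothetical. The scores themselves would come from whatever toxicity, readability, fact-check, and similarity tools you already run, and a real deployment would keep the counters and kill switch in shared storage rather than process memory.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Draft:
    language: str
    toxicity: float            # 0..1, from your toxicity classifier
    readability: float         # 0..100, higher reads more easily
    failed_major_claims: int   # count from the fact-check step
    max_similarity: float      # 0..1, highest similarity to any live URL
    high_risk: bool = False    # health, finance, legal claims, kids
    approved_by: Optional[str] = None


@dataclass
class PublishGate:
    daily_cap_per_language: int = 5
    kill_switch: bool = False
    # In-memory counter for the sketch; production needs shared storage.
    published: defaultdict = field(default_factory=lambda: defaultdict(int), init=False)

    def reasons_to_block(self, d: Draft, day: date) -> list[str]:
        reasons = []
        if self.kill_switch:
            reasons.append("kill switch engaged")
        if d.toxicity > 0.02:
            reasons.append("toxicity above 0.02")
        if d.readability < 60:
            reasons.append("readability below 60")
        if d.failed_major_claims > 1:
            reasons.append("more than one major claim failed fact-check")
        if d.max_similarity > 0.20:
            reasons.append("similarity above 20%")
        if d.high_risk and not d.approved_by:
            reasons.append("high-risk draft lacks human sign-off")
        if self.published[(day, d.language)] >= self.daily_cap_per_language:
            reasons.append("daily cap reached for this language")
        return reasons

    def try_publish(self, d: Draft, day: date) -> bool:
        """Count the publish only if nothing blocks it."""
        if self.reasons_to_block(d, day):
            return False
        self.published[(day, d.language)] += 1
        return True
```

Returning every reason, not just the first, gives the reviewer the whole picture in one pass; flipping `gate.kill_switch = True` blocks everything until someone flips it back.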

How this looks with Trafficontent: you feed brand inputs (tone, product feed, internal link map), Trafficontent drafts multilingual posts with UTM tracking and social cards, you review in staging, run automated checks, then schedule social distribution. Insert manual review where claims, translations, and accessibility matter most. I’ve seen teams cut review time in half with this flow without a single “oops we promised a lifetime warranty in German by accident.”

True story: I worked with a midsize Shopify apparel brand using Trafficontent. We added a PII/trademark pre-check, auto-UTMs, Open Graph previews, mandatory editor sign-off for claims, and consent prompts for EU users. Review time dropped dramatically, exceptions nearly vanished, and organic growth steadied. Automation is your energetic intern—fantastic, but supervised.

SEO and content-quality checks to automate pre-publish

Search doesn’t hate AI; it hates junk. Bake these checks into your pipeline so “publish” doesn’t mean “pray.”

- Metadata completeness: title 50–60 chars, meta description 120–155 chars, one H1, logical H2/H3s.

- Canonical and indexing: one canonical per URL, noindex for thin/localized duplicates you’re not ready to rank.

- Structured data: Article and FAQ schema where relevant; validate with Rich Results Test. Add Open Graph and Twitter Card tags.

- Images: descriptive alt text, compressed, next-gen formats if possible, and lazy-loading.

- Internal linking: 3–5 relevant internal links to cornerstone pages; avoid orphaned URLs.

- External sources: 1–3 reputable references where claims need grounding.

- Originality/duplication: run a plagiarism scan and keep similarity to any live URL on your domain under 20%; block if higher unless justified (e.g., legal notices).

- Thin content detection: ensure intent coverage; for informational posts, aim for entity coverage and at least 600 meaningful words (quality over count).

- Performance: LCP under 2.5s on mobile, CLS under 0.1 for the article template.

- Auto-integrations: update the sitemap and submit it in Search Console (Google retired its sitemap “ping” endpoint in 2023), validate the 200 status and canonical, and check links for 404s.
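Several of these checks (title and description length, a single H1, alt text, internal links) can run on rendered HTML with only the standard library. A minimal sketch under stated assumptions: `site_host` is a parameter introduced here to decide what counts as internal, only the "at least 3" side of the internal-link range is checked because navigation links inflate the count, and empty `alt=""` is flagged even though it is valid for purely decorative images. `PageScan` and `check_page` are hypothetical names; a production pipeline would more likely lean on a crawler or Lighthouse.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class PageScan(HTMLParser):
    """Collects the few signals the metadata checks need."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1_count = 0
        self.images_missing_alt = 0
        self.hrefs: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.description = a.get("content") or ""
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not (a.get("alt") or "").strip():
            self.images_missing_alt += 1
        elif tag == "a" and a.get("href"):
            self.hrefs.append(a["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def is_internal(href: str, site_host: str) -> bool:
    parts = urlsplit(href)
    return (parts.scheme in ("", "http", "https")
            and parts.netloc in ("", site_host)
            and not href.startswith("#"))


def check_page(html: str, site_host: str) -> list[str]:
    scan = PageScan()
    scan.feed(html)
    scan.close()
    problems = []
    if not 50 <= len(scan.title.strip()) <= 60:
        problems.append("title not 50-60 chars")
    if not 120 <= len(scan.description) <= 155:
        problems.append("meta description not 120-155 chars")
    if scan.h1_count != 1:
        problems.append("expected exactly one H1")
    if scan.images_missing_alt:
        problems.append("image(s) missing alt text")
    if sum(is_internal(h, site_host) for h in scan.hrefs) < 3:
        problems.append("fewer than 3 internal links")
    return problems
```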

Set a pass bar (e.g., QA score ≥ 95%) and block publishes that don’t make the cut. It’s not harsh; it’s housekeeping—with revenue.
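The originality check needs a concrete similarity measure. One common, simple choice (swapped in here for illustration, not necessarily what any plagiarism tool uses) is Jaccard similarity over word shingles, i.e. overlapping runs of n words. A minimal sketch: the 5-word shingle size and the 0.20 limit are assumptions to tune, the function names are hypothetical, and comparing a draft against every live URL at scale would call for an index such as MinHash/LSH rather than this pairwise loop.

```python
import re


def shingles(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Overlapping n-word sequences, lowercased and stripped of punctuation."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: str, b: str, n: int = 5) -> float:
    """|A ∩ B| / |A ∪ B| over the two shingle sets; 0.0 if either is empty."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def too_similar(draft: str, live_pages: dict[str, str], limit: float = 0.20) -> list[str]:
    """URLs of live pages whose similarity to the draft exceeds the limit."""
    return [url for url, body in live_pages.items() if jaccard(draft, body) > limit]
```

Shingles catch reordered copy-paste better than whole-document hashes, while being far cheaper than semantic comparison.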

Monitoring, audit trails, and incident response for published content

Think of your AI content like a self-driving car: mostly smooth, but you still want a dashcam. We watch so small glitches don’t grow into big screenshots.

- Monitor: rankings, CTR, bounce/engagement, crawl/indexation, DMCA or policy flags, accessibility regressions, and social sentiment. Useful tools include Google Search Console for search health, ContentKing for real-time change tracking, and a social listening tool for sentiment spikes.

- Audit trails: who approved, prompt history, model version, timestamps, and diffs. WordPress activity logs and Shopify app logs help; export to a tamper-evident store.

- Alerting: anomalies in publish rate, traffic cliffs, or unusual referral spikes trigger alerts to Slack/Teams.
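“Tamper-evident” usually means each log entry commits to the one before it, so editing or deleting an entry breaks the chain. A minimal sketch using a SHA-256 hash chain over JSON records that hold the audit-trail fields listed above; the record keys and function names are hypothetical. It only detects tampering if the latest hash is also kept somewhere the writer can't modify, or if you use a managed append-only (WORM) store instead.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict[str, Any]) -> str:
    # Canonical JSON so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append(log: list[dict[str, Any]], record: dict[str, Any]) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _entry_hash(prev, record)})


def verify(log: list[dict[str, Any]]) -> bool:
    """Recompute the chain; any edited, reordered, or removed entry fails."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

A typical record would carry the approver, prompt ID, model version, timestamp, and a diff reference for each publish.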

Incident playbook (keep it visible):

- Alert triage in 15–30 minutes: severity and scope.

- Quarantine within 15 minutes of triage: unpublish or add noindex; pause related campaigns.

- Human review within 60 minutes: verify facts, claims, and policy hits.

- Fix or roll back within 2 hours: correct content, add disclosures, or revert.

- Comms: notify stakeholders; if public impact, post a clear remediation note.

- Postmortem in 48 hours: root cause, guardrail update, and test.
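Encoding the playbook's clocks as data lets alerting show each responder a concrete deadline. A minimal sketch with hypothetical names (`PLAYBOOK`, `deadlines`, `overdue`): every offset is measured from detection, and the quarantine step is read as 15 minutes after a 30-minute triage. Adjust the table if your team starts those clocks differently.

```python
from datetime import datetime, timedelta, timezone

# Offsets from detection; quarantine is read as 15 minutes after a 30-minute triage.
PLAYBOOK = [
    ("triage", timedelta(minutes=30)),
    ("quarantine", timedelta(minutes=45)),
    ("human review", timedelta(minutes=60)),
    ("fix or roll back", timedelta(hours=2)),
    ("postmortem", timedelta(hours=48)),
]


def deadlines(detected_at: datetime) -> dict[str, datetime]:
    """Absolute due time for each playbook step."""
    return {step: detected_at + offset for step, offset in PLAYBOOK}


def overdue(detected_at: datetime, done: set[str], now: datetime) -> list[str]:
    """Steps past their deadline that haven't been marked done."""
    return [step for step, due in deadlines(detected_at).items()
            if step not in done and now > due]


t0 = datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)
print(overdue(t0, {"triage"}, t0 + timedelta(minutes=50)))  # ['quarantine']
```

Using timezone-aware datetimes avoids deadline errors when responders and servers sit in different time zones.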

If you’re writing postmortems every week, congrats, you’re agile. Now work on starting fewer fires.

Verification tools, tests, and pre-launch checklists

Your tool stack is the skeptical intern who never sleeps (and never drinks your oat milk).

- Facts: Google Fact Check Explorer, ClaimBuster, or Factmata for claim sniff tests.

- Plagiarism: Copyscape or similar.

- Accessibility: WAVE and Lighthouse checks for WCAG basics.

- Bias: lightweight passes using prompt-swaps and keyword screens; log results.

- API/log audits: confirm UTM parameters, consent tags, and publish endpoints did what you think they did.

- Preview everything: Rich Results Test for schema, Open Graph/Twitter card previews, mobile rendering, link checks, and timezone validation for scheduled posts.

Pre-publish gate (keep it compact):

- Metadata and schema validated

- Facts cited and plagiarism clear

- Alt text and accessibility pass

- Privacy check: no PII in prompts, UTMs safe, consent honored

- Disclosure present (if affiliate/AI-assisted)

- QA score ≥ 95% and human sign-off where required

If it passes, publish. If it doesn’t, fix it. Then, yes—grab coffee.
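The compact gate reduces to a score plus a few hard stops. A minimal sketch: the score is the share of checks that pass and the 95% bar comes from the checklist, while treating privacy and disclosure as hard stops (failing regardless of score) is an assumption, as are the check names. Note the arithmetic: with five or six checks, one failure drops the score to 80–83%, so a 95% bar effectively means "everything passes"; the percentage only becomes a real tolerance in larger automated suites.

```python
# Assumption: these two checks fail the gate outright, whatever the score.
HARD_STOPS = ("privacy", "disclosure")


def qa_gate(results: dict[str, bool], needs_signoff: bool, signed_off: bool,
            bar: float = 0.95) -> tuple[bool, float]:
    """Return (may_publish, score); a missing hard-stop check counts as failed."""
    if not results:
        return False, 0.0
    score = sum(results.values()) / len(results)
    hard_fail = any(not results.get(name, False) for name in HARD_STOPS)
    signoff_ok = signed_off or not needs_signoff
    return score >= bar and not hard_fail and signoff_ok, score
```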

Governance, roles, and living documentation

Automation without governance is a group project where everyone assumes someone else brought the slides. Make it explicit.

- Roles:
  - Content owner: strategy, final editorial quality
  - Legal reviewer: disclosures, claims, data use
  - SEO lead: technical quality, schema, internal links
  - Automation engineer: pipelines, alerts, rollbacks

- RACI: one accountable owner per publish. No mystery heroes.

- Versioned policy doc: store in Notion/Confluence, change-log every update, and link the latest version in your pipelines.

- Escalation tree: severity 1 (compliance) routes to legal in minutes; severity 2 (factual) to content lead; severity 3 (style) to editor queue.

- Training cadence: quarterly refreshers; new features require a mini runbook update.

- Metrics that prove guardrails work: reduced takedowns/DMCA notices, stable or rising organic traffic, fewer user complaints, and faster MTTR on incidents.

Target SLOs I like: under 1% of AI-assisted content requires rollback, incidents resolved in under 2 hours, and monthly audits show ≥ 98% compliance on disclosures/accessibility. If you’re hitting those, keep scaling. If not, tighten the gates before adding more fuel.
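These SLO targets are plain ratios over a reporting period, so they are easy to compute from the audit log and incident tracker. A minimal sketch; `PeriodStats`, its fields, and `slo_report` are hypothetical. It reads "incidents resolved in under 2 hours" as every incident rather than the median, and treats a period with no audits as failing the compliance target, since nothing was demonstrated.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class PeriodStats:
    ai_posts_published: int
    ai_posts_rolled_back: int
    incident_durations: list[timedelta]
    audited_items: int
    audited_compliant: int   # items passing disclosure/accessibility audit


def slo_report(s: PeriodStats) -> dict[str, bool]:
    rollback_rate = (s.ai_posts_rolled_back / s.ai_posts_published
                     if s.ai_posts_published else 0.0)
    compliance = s.audited_compliant / s.audited_items if s.audited_items else 0.0
    return {
        "rollback_rate_under_1pct": rollback_rate < 0.01,
        "incidents_under_2h": all(d < timedelta(hours=2) for d in s.incident_durations),
        "compliance_at_least_98pct": compliance >= 0.98,
    }
```

For example, 3 rollbacks out of 400 posts is a 0.75% rate, inside the 1% target; 118 compliant items out of 120 audited is about 98.3%.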

Useful next step: Pick one high-impact safeguard to install this week—staging-first with a 95% QA gate, or a mandatory claims sign-off for health/finance. Small guardrails compound faster than big regrets.

References:

- FTC Endorsement Guides: https://www.ftc.gov/business-guidance/resources/ftcs-endorsement-guides-what-people-are-asking

- Google Search Essentials (Spam Policies): https://developers.google.com/search/docs/essentials/spam-policies

- EU GDPR overview: https://commission.europa.eu/law/law-topic/data-protection/data-protection-eu_en