If you’ve been pouring cash into ads and watching the ROI trickle like a leaky faucet, let's do something smarter: tune the technical SEO under your WordPress hood. I’ve helped small businesses and stores flip slow, messy sites into lean, discoverable machines. The result isn’t sleight-of-hand; it’s measurable organic traffic and conversions that compound while your ad budget rests.

This guide walks through the three pillars that deliver quicker, sustainable returns: speed (Core Web Vitals), site structure (navigation & internal linking), and crawlability (robots, sitemaps, indexing). Expect practical steps, real mini-cases, and a 30–60–90 day plan you can start this week. No developer PhD is required, but you will get your hands a bit dirty. Think of it as tuning the engine before you pour in more fuel.

Speed is king: mastering Core Web Vitals for WordPress

Core Web Vitals measure what your visitors actually feel: how fast the main content appears (LCP), whether the layout jumps around like a caffeinated squirrel (CLS), and how quickly the page responds to interaction (INP, Interaction to Next Paint, which replaced FID as a Core Web Vital in March 2024). These aren’t vanity metrics: they affect rankings and revenue because users bounce when pages feel slow or glitchy.

On WordPress, problems typically pile up from hosting, themes, plugins, oversized images, and font-loading shenanigans. Start with diagnostics: run PageSpeed Insights or Web.dev to see LCP/CLS hotspots. Then prioritize high-ROI fixes:

- Improve hosting — a responsive host with fast PHP and HTTP/2 makes a big baseline difference. Cheap shared hosting is like renting a desk in a very loud coffee shop.

- Compress and modernize images — WebP or AVIF, right size for the device, and serve through a CDN.

- Eliminate render-blocking CSS/JS — defer non-critical scripts and inline only the critical CSS for above-the-fold content.

- Reserve space for dynamic elements — define width/height to prevent CLS; don’t let widgets surprise the layout mid-load.

- Lazy-load offscreen images and iframes so the browser prioritizes visible content.
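To make the last two checks concrete, here is a minimal Python sketch that scans a page’s HTML for `<img>` tags without explicit `width`/`height` (a common CLS source) or without `loading="lazy"`. It uses only the standard library; the sample markup and file names are hypothetical, and a real audit would run against your rendered pages.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collects <img> tags that lack explicit dimensions or lazy loading."""

    def __init__(self):
        super().__init__()
        self.missing_dimensions = []  # src values missing width or height
        self.not_lazy = []            # src values without loading="lazy"

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src", "(no src)")
        if "width" not in attr or "height" not in attr:
            self.missing_dimensions.append(src)
        if attr.get("loading") != "lazy":
            self.not_lazy.append(src)


def audit_images(html):
    """Return (images missing dimensions, images not lazy-loaded)."""
    parser = ImageAudit()
    parser.feed(html)
    return parser.missing_dimensions, parser.not_lazy


# Hypothetical markup for illustration only.
sample = """
<img src="hero.webp" width="1200" height="600">
<img src="gallery-1.webp" loading="lazy">
<img src="gallery-2.webp" width="400" height="300" loading="lazy">
"""
missing, eager = audit_images(sample)
print("Missing width/height:", missing)
print("Not lazy-loaded:", eager)
```

The hero image showing up as “not lazy-loaded” is what you want: above-the-fold images should load eagerly, so treat that list as a prompt to review rather than a list of bugs.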

I always batch fixes: pick the few offenders that move the needle (hero images, fonts, heavy plugins), fix them in a sprint, then re-test. The goal is faster pages and happier users — not chasing a perfect score like a raccoon after a shiny object.

WordPress speed optimizations: plugins, code, and assets

If your site were a kitchen, plugins would be the appliances. Useful, until every counter is full and you can’t make toast. I start every audit by pruning: disable plugins one at a time on staging and run Lighthouse to find the milliseconds that hide behind plugin bloat. Some plugins add essential features; others add weight and conflicts.

Key tactics that actually move the needle:

- Choose a lean theme — heavy multipurpose themes are feature-rich and performance-poor. A lean theme with hooks lets you add only the features you need.

- Minify, defer, and combine CSS/JS — use WP Rocket, Autoptimize, or similar, but test carefully. Combining scripts can break things; defer non-essential JS and inline critical CSS.

- Use a CDN — Cloudflare, BunnyCDN, or KeyCDN reduce latency for distant visitors. Turn on caching at the edge and purge on deploys.

- Profile plugins — Query Monitor, New Relic, or simple Lighthouse runs help identify slow plugins. Replace or remove offenders rather than layering more patches on top.

- Font strategies — preload critical fonts, use font-display: swap, and subset where possible to avoid blocking text rendering.
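The disable-and-measure plugin audit is easier to act on when you rank the results. Below is a small sketch, assuming you have recorded several LCP readings (in seconds) for the baseline and for each test run with one plugin disabled. The plugin names and numbers are invented for illustration, not real measurements.

```python
from statistics import median


def rank_plugin_savings(baseline_runs, runs_with_plugin_disabled):
    """Return (plugin, seconds saved) pairs, largest saving first.

    Medians are used because individual lab runs vary from one to the next.
    """
    baseline = median(baseline_runs)
    savings = {
        plugin: round(baseline - median(runs), 2)
        for plugin, runs in runs_with_plugin_disabled.items()
    }
    return sorted(savings.items(), key=lambda item: item[1], reverse=True)


# Hypothetical LCP measurements in seconds (not real data).
baseline_lcp = [3.9, 4.1, 4.0]
lcp_when_disabled = {
    "slider-plugin": [2.9, 3.1, 3.0],
    "social-feed": [3.6, 3.7, 3.5],
    "contact-form": [4.0, 3.9, 4.0],
}

for plugin, saved in rank_plugin_savings(baseline_lcp, lcp_when_disabled):
    print(f"{plugin}: {saved:+.2f}s")
```

Anything near zero is probably not worth replacing on speed grounds alone; start with the top of the list.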

Images deserve their own love letter: compress them, use responsive srcset, and enable lazy loading. In production, I’ve seen a site’s LCP drop by half after moving images to WebP and trimming a few heavy plugins. Remember: one small change can crash a layout faster than a questionable haircut, so always test on staging and have rollbacks ready.

Crawlability fundamentals: robots.txt, sitemaps, and URL hygiene

Think of crawlability like building clear highways for search engines. Robots.txt is the doorman — you want it to block backstage routes like /wp-admin/ while inviting crawlers to the main stage with a sitemap. A safe baseline robots.txt is short and simple:

    User-agent: *
    Disallow: /wp-admin/
    Allow: /wp-admin/admin-ajax.php
    Sitemap: https://example.com/wp-sitemap.xml
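If you want to sanity-check a robots.txt before deploying it, Python’s standard-library `urllib.robotparser` can evaluate paths against the rules. This is a rough sketch using the baseline file above and example.com as a placeholder domain. One caveat: as far as I know, this parser applies the first matching rule in file order, whereas Google picks the most specific match, so the `admin-ajax.php` exception is deliberately not checked here.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: https://example.com/wp-sitemap.xml
"""


def load_rules(robots_txt):
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
for path in ("/", "/blog/hello-world/", "/wp-admin/options.php"):
    allowed = rules.can_fetch("*", "https://example.com" + path)
    print(f"{path}: {'allowed' if allowed else 'blocked'}")

# site_maps() lists the Sitemap: lines found in the file (Python 3.8+).
print("Sitemaps:", rules.site_maps())
```

For the final word on how Google reads your file, use the robots.txt report in Search Console.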

Important checks I always run:

- Verify the sitemap URL isn’t blocked by robots.txt and submit it to Google Search Console. Plugins like Yoast or Rank Math generate sitemaps automatically.

- Ensure canonicalization is consistent — every page should declare the preferred URL using rel=canonical to avoid duplicate content fights.

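- Decide how to handle URL parameters: for filters, sorts, or campaign UTMs, canonicalize to the main page. Google retired the URL Parameters tool in Search Console in 2022, so rely on canonical tags, consistent internal links, and robots.txt rules rather than a GSC setting.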

- Find and fix crawl traps — infinite calendar pages, faceted navigation that generates thousands of near-duplicate URLs, or poorly implemented search result pages.
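The parameter rule above can be expressed as a small function that maps any incoming URL to the version you would put in rel=canonical. This is a sketch only: the parameter names (`orderby`, `sort`, `filter_color`, `gclid`, `fbclid`) are my assumptions about which parameters don’t change content, and your site’s list will differ.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: tracking parameters never change page content on this site.
TRACKING_PREFIXES = ("utm_",)
TRACKING_PARAMS = {"gclid", "fbclid"}
# Assumption: sort/filter parameters only produce near-duplicates of a listing.
NON_CANONICAL_PARAMS = {"orderby", "sort", "filter_color"}


def canonical_url(url):
    """Drop tracking/sort/filter parameters and the fragment; lowercase the host."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith(TRACKING_PREFIXES)
        and key not in TRACKING_PARAMS
        and key not in NON_CANONICAL_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


print(canonical_url("https://Example.com/shop/?orderby=price&utm_source=news&page=2"))
```

Note that `page=2` survives: paginated pages usually carry distinct content, so they keep their own canonical.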

Keep sitemaps tidy and representative: if you add or remove hundreds of products, ensure lastmod timestamps reflect real updates so crawlers prioritize fresh, important content. If you ignore crawl hygiene, Google will snack on low-value pages while your money pages sit in the waiting room sipping stale coffee.
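In practice your SEO plugin or WordPress core generates the sitemap, but it helps to see what a tidy entry looks like. Here is a minimal sketch that builds a sitemap with honest `lastmod` dates using the standard library; the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod should be the date the content really changed (YYYY-MM-DD).
        ET.SubElement(entry, "lastmod").text = modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration.
pages = [
    ("https://example.com/", date(2024, 5, 1)),
    ("https://example.com/products/blue-widget/", date(2024, 5, 14)),
]
print(build_sitemap(pages))
```

The point of the sketch is the `lastmod` line: feed it the real modification date, not the time the sitemap was regenerated.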

For reference on best practices, see Google’s documentation on sitemaps and robots: Google Search Central: robots.txt

Site structure and internal linking for faster indexing

Structure is the map that both users and crawlers follow. A logical hierarchy and purposeful internal links get your best pages indexed faster and push authority to the content that matters. I recommend thinking like a librarian who hates chaos: flat, organized, and labeled clearly.

Practical rules I use:

- Keep important pages within three clicks from the homepage — deep nesting hides pages and slows crawling.

- Use top-level categories for broad topics and reserve subpages for real subtopics. Avoid category overlap where the same post appears under many categories.

- Build hub-and-spoke clusters: pillar pages link to supporting articles and vice versa. This concentrates link equity and helps search engines see topical relevance.

- Audit for orphan pages — any page with zero internal links is invisible to crawlers unless the sitemap rescues it. Add links from related posts, category hubs, or site-wide sections.

- Implement breadcrumbs to expose hierarchy to users and search engines. Breadcrumbs reduce clicks and clarify context.
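The three-click rule and the orphan check are both graph problems, so a crawl export can be audited with a breadth-first search. The sketch below assumes you have a mapping of each page to the pages it links to (for example, from a Screaming Frog export) plus a full list of URLs from the sitemap. The graph here is made up. It flags pages with no internal path from the homepage, which is slightly broader than “zero inbound links” but catches the same problem.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage: {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_structure(links, all_pages, home="/", max_depth=3):
    """Return (pages unreachable from home, pages deeper than max_depth)."""
    depths = click_depths(links, home)
    unreachable = sorted(p for p in all_pages if p not in depths)
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    return unreachable, too_deep


# Hypothetical internal-link graph: page -> pages it links to.
links = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/seo-guide/"],
    "/blog/seo-guide/": ["/blog/seo-guide/part-2/"],
    "/blog/seo-guide/part-2/": ["/blog/seo-guide/part-3/"],
    "/shop/": [],
}
all_pages = set(links) | {"/blog/seo-guide/part-3/", "/landing/old-promo/"}

unreachable, too_deep = audit_structure(links, all_pages)
print("No internal path from home:", unreachable)
print("Deeper than 3 clicks:", too_deep)
```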

Anchor text matters — use descriptive, natural phrases rather than “click here” or random keywords. In one cleanup I trimmed categories, consolidated similar posts, and added hub links; indexing speed improved and more pages surfaced in search within days. Structure isn’t sexy, but it’s reliable — like paying taxes, but with better results and less paperwork.

Structured data and schema on WordPress

Schema is shorthand for telling search engines what your content actually is — think "Article," "FAQ," "Product," or "HowTo." It can win you rich results, better CTRs, and clearer signals to crawlers. Implemented sensibly, schema is like putting name tags on your content at a networking event: people (and bots) find what they need faster.

Where to begin:

- Start with Organization schema on the homepage (name, logo, contact). Add Article schema for blog posts to expose headline, image, author, and publish date.

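- Use FAQPage for Q&A sections and HowTo for step-by-step guides. You can layer types: a single post can be both an Article and a HowTo if relevant. Keep expectations realistic, though: in 2023 Google limited FAQ rich results to a narrow set of sites and retired HowTo rich results, so treat this markup as a clarity signal rather than a guaranteed search feature.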

- Plugins (All in One SEO, Rank Math, Schema Pro) automate many fields correctly and are safer for most sites than manual tagging.

- If you prefer full control, inject JSON-LD snippets via header/footer scripts or within the post template. Test changes using Google’s Rich Results Test and the Schema.org validator.
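For the manual route, JSON-LD is just a JSON object inside a script tag. The sketch below builds Article and FAQPage objects with schema.org property names and wraps them for embedding. The function names and sample values are mine, and Google’s documentation lists which properties each rich result type actually requires.

```python
import json


def article_jsonld(headline, author, published, image_url):
    """Article markup: headline, image, author, and publish date."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,
        "image": [image_url],
    }


def faq_jsonld(questions):
    """FAQPage markup from a list of (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in questions
        ],
    }


def script_tag(data):
    """Serialize to a JSON-LD script tag, escaping '</' so text can't close it."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


# Placeholder values for illustration.
print(script_tag(article_jsonld(
    "Technical SEO for WordPress", "Jane Doe", "2024-05-14",
    "https://example.com/images/cover.webp",
)))
print(script_tag(faq_jsonld([("Do I need a CDN?", "It helps distant visitors.")])))
```

Whatever generates the markup, paste the output into the Rich Results Test before shipping it.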

Maintain and validate regularly — broken or inaccurate schema is worse than none, because it confuses crawlers and can trigger manual actions or loss of rich features. If you use Trafficontent, it can generate FAQ schema and optimized metadata as part of the publishing workflow, which is handy when you scale content without turning into a schema hermit.

For validation guidance, see Google’s rich results testing tool: Rich Results Test

Indexing controls and crawl budget management

Indexing controls are the throttle knobs for what search engines should and shouldn’t spend time on. Most small sites don't need complex crawl budget gymnastics, but as you grow — especially e-commerce sites — tell search engines where to focus. Otherwise Google will play tourist through tag archives while your product pages queue up like impatient shoppers.

Practical steps I apply:

- Use noindex for low-value pages: paginated tag archives, staging copies, author pages for single-author blogs, or printer-friendly templates. This prevents index bloat.

- Canonical tags — always set a canonical URL for pages with similar content. WordPress and SEO plugins usually handle this, but verify for parameterized pages.

- Keep sitemaps fresh: ensure lastmod updates when content changes. For rapid content churn, resubmit the sitemap in Google Search Console after large batches of changes.

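- Don’t count on a crawl-rate setting in GSC: Google retired the legacy crawl rate limiter in early 2024. If Googlebot is overloading a fragile server, Google’s documented short-term remedy is to return 503 or 429 responses; asking for more crawling is rarely needed on small sites.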

- Use X-Robots-Tag headers for non-HTML resources when needed (e.g., avoid indexing duplicate PDFs or auto-generated feeds).
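Since noindex can arrive through either a meta robots tag or an `X-Robots-Tag` header, it is worth checking both when you verify a rule took effect. Here is a simplified sketch: it assumes you already have the response headers and HTML in hand, and it ignores bot-specific header forms such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


def split_directives(value):
    """Turn 'NoIndex, follow' into {'noindex', 'follow'}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class MetaRobots(HTMLParser):
    """Collects directives from <meta name="robots" content="...">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.directives |= split_directives(attr.get("content") or "")


def is_indexable(headers, html=""):
    """True unless the header or the meta tag says noindex (or none)."""
    directives = set()
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            directives |= split_directives(value)
    parser = MetaRobots()
    parser.feed(html)
    directives |= parser.directives
    return not ({"noindex", "none"} & directives)


# Hypothetical responses for illustration.
print(is_indexable({"X-Robots-Tag": "noindex, follow"}))                 # PDF or feed
print(is_indexable({}, '<meta name="robots" content="index, follow">'))  # normal page
print(is_indexable({}, '<meta name="robots" content="noindex">'))        # thin tag page
```

Remember that a crawler has to be able to fetch the page to see either signal, so don’t block a noindexed URL in robots.txt at the same time.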

I once set noindex on hundreds of thin tag pages and redirected internal links to category hubs. Within a week, Google shifted crawl patterns and focused on the money pages — indexing improved and server load dropped. In short: make search engines work smarter, not harder.

Measurement, dashboards, and ongoing audits

If you don’t measure, you’re guessing — and guessing is expensive. I build small, focused dashboards that track Core Web Vitals, crawl stats, and index coverage so regressions are obvious. Keep it simple and actionable: see a spike in CLS? You know where to look. See a drop in crawl rate? Check deployments and robots rules.

Core metrics to monitor:

- LCP — target under 2.5s (mobile and desktop). Track via Google Search Console and PageSpeed Insights.

- CLS — aim for under 0.1. Watch for spikes after adding ad scripts or widgets.

- Crawl stats — use GSC’s Crawl Stats report to see fetch frequency and any errors.

- Index coverage — monitor which URLs are indexed, excluded, or flagged with errors and fix 404s, redirect chains, and canonical problems.
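For the dashboard, it helps to turn raw numbers into the same good / needs improvement / poor buckets Google uses. The sketch below encodes the published Core Web Vitals thresholds. I’ve added INP alongside the LCP and CLS targets listed above, and the sample readings are invented.

```python
# (good up to, poor above) per metric, following the thresholds on web.dev.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
    "INP": (200, 500),   # milliseconds
}


def rate(metric, value):
    """Bucket a measurement as 'good', 'needs improvement', or 'poor'."""
    good_max, poor_above = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= poor_above:
        return "needs improvement"
    return "poor"


# Hypothetical field data for one page.
readings = {"LCP": 3.1, "CLS": 0.05, "INP": 620}
for metric, value in readings.items():
    print(f"{metric} = {value}: {rate(metric, value)}")
```

A simple rule like “alert when any metric leaves the good bucket” is usually enough to catch regressions after a deploy.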

I run monthly audits with Lighthouse, PageSpeed Insights, and a crawling tool (Screaming Frog or Sitebulb). Automate exports into a dashboard (Looker Studio, formerly Data Studio, or a simple spreadsheet) so stakeholders can see progress. The goal: quick detection, quick rollback, and a repeating optimization loop, like flossing, but for your site’s health.

Useful tools: Lighthouse, PageSpeed Insights.

Content-first SEO as ROI amplifier: faster returns than ad spend

Technical fixes set the stage; content delivers the audience. When you publish content that matches user intent and your site is fast and crawlable, organic traffic compounds far faster than continuously increasing ad spend. Content-first means publishing with a structure and tech readiness that helps pages rank quickly and convert.

How I align content and tech for quick ROI:

- Topic clusters: create pillar pages and supporting posts so search engines understand topical authority. This multiplies ranking opportunities without multiplying ad spend.

- Pre-publish checklist: canonical tags, clean URLs, schema (FAQ if applicable), optimized images, and lazy loading all in place before hitting publish.

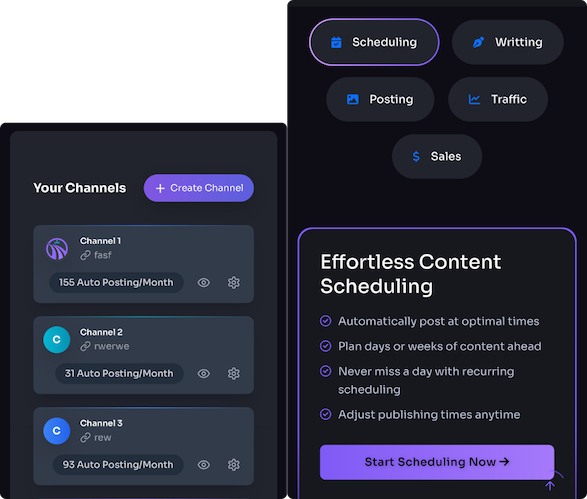

- Automate distribution: tools like Trafficontent can generate SEO-optimized posts, produce FAQ schema, and schedule shareable assets across channels — saving time and improving reach while maintaining SEO best practices.

- Evergreen focus: aim for content that keeps returning value. A few well-optimized evergreen pages often outperform dozens of fleeting ad campaigns.

I’ve seen teams cut ad spend while growing organic clicks by aligning faster pages with a content plan. One store used Trafficontent to spin up optimized product posts and auto-share them; traffic rose and ad dependency dropped. In short: treat content as the engine, technical SEO as fuel, and distribution as the steering wheel.

30-60-90 day plan: implementation without the ad spend

Here’s a pragmatic 90-day roadmap so you can start delivering ROI before you consider throwing more money at ads. I split responsibilities into small, testable sprints so you don’t break the site or your team’s morale.

- Days 0–30 (Audit & quick wins)

  - Run Lighthouse/PageSpeed, Web.dev, and a crawl (Screaming Frog). Build a short list of top LCP/CLS offenders.

  - Swap heavy images to WebP/AVIF, enable lazy loading, configure CDN, and implement caching plugin (WP Rocket or similar).

  - Prune top 5 non-essential plugins on staging and measure impact.

  - Submit sitemap and verify robots.txt; fix any accidental blocks.

- Days 31–60 (Structure & schema)

  - Flatten navigation where needed, consolidate categories, fix orphan pages, and add breadcrumbs.

  - Implement core schema (Organization, Article, FAQ) via plugin or JSON-LD snippets and validate with Rich Results Test.

  - Set up dashboards for Core Web Vitals and index coverage; baseline metrics.

- Days 61–90 (Crawl budget & content rollout)

  - Apply noindex to low-value pages, refine canonical rules, and update sitemap lastmods.

  - Publish a cluster of 4–6 SEO-optimized posts aligned with pillar content. Ensure all pre-publish checks pass (URLs, schema, images).

  - Monitor crawl stats and indexing; iterate on any remaining technical debt. Measure organic clicks vs. prior ad-driven traffic.

Assign tasks to an internal owner or a freelancer: hosting & CDN (tech), plugin audit (dev), content & schema (editor), and measurement (analytics). Re-test Core Web Vitals after each milestone and celebrate small wins — they compound faster than you think (and taste better than recycled ad spend).

Next step: pick one high-traffic page, run a PageSpeed audit, and apply the three fastest changes you can (image swap, caching, and deferred JS). Lab scores should move as soon as you re-test, and you’ll have a story to tell at your next coffee break.

References: Core Web Vitals (web.dev), Google Search Central: robots.txt, Lighthouse