Automation is no longer a convenience. For modern ecommerce teams, it’s a strategic lever. When you connect Trafficontent (or a similar automation platform) to WordPress and Shopify, you don’t just save editorial time: you change how search engines see your site. Faster publishes, predictable signals, and cleaner crawl paths all affect indexation, rankings, and how quickly new SKUs reach buyers.

This guide walks through the SEO mechanics of automated WordPress publishing and offers practical setup and measurement guidance you can apply today. Expect concrete examples, a full idea→index pipeline, and rollout advice tailored to store owners, content leads, and cross-platform teams balancing Shopify product data with WordPress content.

Speed and delivery impacts of automated WordPress publishing

Automation shortens the path from idea to live page by collapsing manual handoffs: templated metadata, automated webhooks, and integrated feeds replace repetitive manual checks. In older workflows, teams queued content in batches, so a product update written at 9:00 a.m. might not appear until the next scheduled push at noon. With Trafficontent-style webhooks and publish triggers, approved content can go live within seconds.
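A minimal sketch of the receiving end of such a webhook, assuming the sender signs requests the way Shopify does (a base64-encoded HMAC-SHA256 of the raw request body, sent in the `X-Shopify-Hmac-Sha256` header). The handler name and its "queued"/"rejected" return values are hypothetical, and Trafficontent or another platform may sign its calls differently, so check its documentation before relying on this scheme.

```python
import base64
import hashlib
import hmac


def verify_webhook(raw_body: bytes, signature_header: str, secret: str) -> bool:
    """Check that signature_header is the base64 HMAC-SHA256 of raw_body."""
    digest = hmac.new(secret.encode("utf-8"), raw_body, hashlib.sha256).digest()
    expected = base64.b64encode(digest).decode("ascii")
    # compare_digest runs in constant time, so timing does not leak the signature
    return hmac.compare_digest(expected, signature_header)


def handle_product_update(raw_body: bytes, headers: dict, secret: str) -> str:
    """Hypothetical handler: reject unsigned calls, otherwise queue a publish job."""
    signature = headers.get("X-Shopify-Hmac-Sha256", "")
    if not verify_webhook(raw_body, signature, secret):
        return "rejected"
    # A real pipeline would push a job onto the publishing queue here.
    return "queued"
```

Verifying the signature before doing any work keeps a forged call from triggering a publish, and handing the job to a queue lets the webhook respond quickly.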

Raw publishing speed matters, but what users and crawlers actually see depends on caching. A CDN edge that still holds the old version of a page keeps serving it until its cache lifetime expires, so an instant publish can stay invisible for minutes or hours. Your automation should call the CDN’s purge endpoint after each publish, or send cache headers with short edge lifetimes, so updated product pages reflect price and stock changes right away.
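One way to make purges predictable is to compute, for every product change, the full set of URLs whose cached copies are now stale. The sketch below assumes a `/product/<slug>/` and `/category/<slug>/` permalink layout with per-category sitemap files; the purge request itself is CDN-specific and is not shown. It also builds a `Cache-Control` value that lets the CDN cache a page briefly while browsers revalidate.

```python
from urllib.parse import urljoin


def purge_targets(base_url: str, product_slug: str, category_slug: str) -> list[str]:
    """URLs that go stale when one product's price or stock changes.

    Assumes /product/<slug>/, /category/<slug>/ and sitemap-<category>.xml
    paths; adapt to your permalink structure.
    """
    paths = [
        f"/product/{product_slug}/",
        f"/category/{category_slug}/",  # hub page shows price and stock too
        f"/sitemap-{category_slug}.xml",  # lastmod changed for this segment
    ]
    return [urljoin(base_url, path) for path in paths]


def product_cache_headers(edge_ttl: int = 300) -> dict[str, str]:
    """Cache headers for product pages.

    max-age=0 makes browsers revalidate; s-maxage lets shared caches such as
    a CDN keep the page for edge_ttl seconds between purges.
    """
    return {"Cache-Control": f"public, max-age=0, s-maxage={edge_ttl}"}
```

The short edge lifetime acts as a safety net: if a purge call fails, the stale copy still expires within minutes.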

Automation also affects render performance. Templates should minimize heavy block rendering and inline critical CSS to reduce first-contentful-paint for auto-published posts. Image processing — automatic resizing, WebP conversion, and lazy-loading attributes — should run as part of the pipeline so the published page is both live and fast. Finally, reliability matters: queue-based publishing with retry backoffs and dead-letter handling may add a few seconds, but it prevents errors that could produce 404s or partial pages that harm SEO.
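The queue-and-retry pattern can be sketched as follows. The `publish` callable stands in for whatever writes to WordPress (for example, a REST API call), `TransientError` is a hypothetical exception your client would raise for retryable failures, and `sleep` is injectable so the backoff can be tested without waiting.

```python
import time
from typing import Callable


class TransientError(Exception):
    """A failure worth retrying, such as a timeout or an HTTP 503."""


def publish_with_retry(job: dict, publish: Callable[[dict], None], dead_letter: list,
                       max_attempts: int = 4, base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Publish a job, retrying transient failures with exponential backoff.

    Jobs that keep failing, or fail permanently, are parked in dead_letter
    so they can be inspected instead of silently disappearing.
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            publish(job)
            return True
        except TransientError as exc:
            last_error = exc
            if attempt < max_attempts:
                sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
        except Exception as exc:  # permanent error: retrying will not help
            dead_letter.append({"job": job, "error": repr(exc), "attempts": attempt})
            return False
    dead_letter.append({"job": job, "error": repr(last_error), "attempts": max_attempts})
    return False
```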

Indexing signals and content freshness

Search engines reward reliable freshness signals. Automated workflows make those signals predictable: if your sitemap’s lastmod values update on every publish, crawlers can learn that your pages change regularly and may return more often. For ecommerce sites, small updates such as price changes, stock adjustments, and new reviews become consistent freshness signals when automation pushes them out as they happen.

But automation can accidentally create noise. When content variations are generated automatically (color swatches, region variants, or language copies), canonical tags and hreflang must be precise to avoid duplicate content. Standardize canonical rules in your templates so every variant points back to the preferred URL. For international stores, ensure hreflang annotations are included and maintained by the pipeline so engines index the right locale pages.
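A minimal sketch of template logic that emits the canonical and hreflang tags for one page, assuming the pipeline knows every localized URL of a product. The function name and example URLs are illustrative. Each locale page should normally canonicalize to itself and list the full set of alternates, including itself, so the annotations are reciprocal; color or size variants that are not localized would instead pass the preferred product URL as `canonical`.

```python
from html import escape


def head_links(canonical: str, locale_urls: dict[str, str], default_locale: str) -> list[str]:
    """Build canonical and hreflang <link> tags for one page.

    locale_urls maps hreflang codes (e.g. "en-us", "de-de") to the URL of the
    same product in that locale, including the current page.
    """
    tags = [f'<link rel="canonical" href="{escape(canonical)}">']
    for code, url in sorted(locale_urls.items()):
        tags.append(f'<link rel="alternate" hreflang="{code}" href="{escape(url)}">')
    # x-default names the fallback page when no listed locale matches the user
    fallback = escape(locale_urls[default_locale])
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}">')
    return tags
```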

Structured data must also stay accurate. Search engines frequently use Product schema fields for price, availability, and aggregateRating in rich results. If your automation pulls price or review data from feeds, make sure those feeds are normalized and timestamped; automated pre-publish checks should validate that prices fall within expected ranges and that rating counts are integers to avoid malformed JSON-LD. Finally, update XML sitemaps on every publish. When your SEO plugin or Trafficontent refreshes the sitemap with an accurate lastmod value, new pages are discoverable much sooner. Note that Google deprecated its sitemap ping endpoint in 2023 and now relies on lastmod in sitemaps it already knows about (through robots.txt or Search Console); Bing and several other engines accept direct notifications through the IndexNow protocol.
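A sketch of the pre-publish check and JSON-LD rendering described above, assuming a normalized feed row with `sku`, `name`, `price`, `currency`, `stock`, `rating`, and `review_count` fields (hypothetical names). The price bounds are parameters you would set per category; the output uses the schema.org `Product`, `Offer`, and `AggregateRating` types.

```python
import json


def validate_feed_row(row: dict, min_price: float, max_price: float) -> list[str]:
    """Pre-publish sanity checks; an empty list means the row looks safe."""
    problems = []
    price = row.get("price")
    if not isinstance(price, (int, float)) or not min_price <= price <= max_price:
        problems.append(f"price {price!r} outside {min_price}-{max_price}")
    count = row.get("review_count")
    if count is not None and (not isinstance(count, int) or count < 0):
        problems.append(f"review_count {count!r} is not a non-negative integer")
    return problems


def product_jsonld(row: dict) -> str:
    """Render schema.org Product markup from a row that passed validation."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "sku": row["sku"],
        "name": row["name"],
        "offers": {
            "@type": "Offer",
            "price": f"{row['price']:.2f}",
            "priceCurrency": row["currency"],
            "availability": "https://schema.org/InStock"
            if row["stock"] > 0 else "https://schema.org/OutOfStock",
        },
    }
    # Only emit a rating block when there are reviews to back it up
    if row.get("review_count"):
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": row["rating"],
            "reviewCount": row["review_count"],
        }
    return json.dumps(data, indent=2)
```

Rows that return problems would be held back rather than published with malformed or misleading markup.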

Crawl efficiency for large WordPress catalogs

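Scale changes the calculus. A 5,000-SKU catalog behaves very differently from a 50-product shop: once variants, filters, and archives multiply the URL count, crawl budget can become a constraint, and careless automation can waste it. Partitioning helps. Segment sitemaps by category, brand, or feed and publish a sitemap index that points to these smaller files. Each file stays well under the protocol’s limit of 50,000 URLs, and Search Console lets you filter index coverage by submitted sitemap, so a problem in one segment is easy to isolate.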
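A minimal sketch of segmented sitemaps using Python’s standard library, assuming URLs are already grouped by segment and carry ISO 8601 `lastmod` dates in one consistent format. The `sitemap-<segment>-<n>.xml` file names are illustrative; most SEO plugins generate their own.

```python
from xml.etree import ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # protocol limit per file (files must also stay under 50 MB uncompressed)


def build_sitemaps(base_url: str,
                   urls_by_segment: dict[str, list[tuple[str, str]]]) -> dict[str, str]:
    """Split URLs into per-segment sitemap files plus one sitemap index.

    urls_by_segment maps a segment name (category, brand, feed) to
    (loc, lastmod) pairs. Returns {filename: xml_string}.
    """
    files = {}
    index = ET.Element("sitemapindex", xmlns=NS)
    for segment, entries in sorted(urls_by_segment.items()):
        for part, start in enumerate(range(0, len(entries), MAX_URLS), start=1):
            chunk = entries[start:start + MAX_URLS]
            urlset = ET.Element("urlset", xmlns=NS)
            for loc, lastmod in chunk:
                url = ET.SubElement(urlset, "url")
                ET.SubElement(url, "loc").text = loc
                ET.SubElement(url, "lastmod").text = lastmod
            name = f"sitemap-{segment}-{part}.xml"
            files[name] = ET.tostring(urlset, encoding="unicode")
            entry = ET.SubElement(index, "sitemap")
            ET.SubElement(entry, "loc").text = f"{base_url}/{name}"
            # Same-format ISO dates sort as strings, so max() is the newest change
            ET.SubElement(entry, "lastmod").text = max(lm for _, lm in chunk)
    files["sitemap-index.xml"] = ET.tostring(index, encoding="unicode")
    return files
```

Because each index entry carries the newest lastmod of its file, a crawler reading the index can tell which segments changed without fetching every file.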

Architectural clarity improves crawl efficiency. Adopt a hub-and-spoke internal linking model: category hubs link to product pages with descriptive anchor text, and breadcrumb schema points back to the category. This helps crawlers prioritize entry points and reach deeper pages without chasing parameterized or duplicate URLs. Keep low-value pages out of the index: tag archives, author pages, staging copies, and parameterized filters frequently add noise. Choose the tool deliberately: a noindex tag removes a page from the index but only works if crawlers can fetch the page, while a robots.txt disallow stops crawling altogether, which also means a noindex on that URL is never seen.
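Template-level noindex rules can be expressed as a small function. The path prefixes and parameter names below are assumptions modeled on common WordPress and WooCommerce URLs (`/tag/`, `/author/`, `orderby`, `filter_<attribute>`); replace them with the patterns your site actually produces.

```python
from urllib.parse import parse_qs, urlsplit

# Assumed patterns; adjust to the archives and filters your site generates.
NOINDEX_PREFIXES = ("/tag/", "/author/")
SORT_PARAMS = {"orderby"}


def robots_meta(url: str) -> str:
    """Robots meta value a template should emit for this URL."""
    parts = urlsplit(url)
    if parts.path.startswith(NOINDEX_PREFIXES):
        return "noindex, follow"  # archive page: drop it, keep its links crawlable
    params = parse_qs(parts.query, keep_blank_values=True)
    if any(p.startswith("filter_") or p in SORT_PARAMS for p in params):
        return "noindex, follow"  # filtered or re-sorted copy of a category
    return "index, follow"
```

These pages stay crawlable so the noindex can be read; blocking the same URLs in robots.txt would hide the tag from crawlers.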

Automation should be crawl-aware. Instead of republishing thousands of SKUs in one batch (which can trigger a burst of crawling that strains your server and wastes budget), stagger updates. Schedule smaller groups or use randomized publish intervals within a defined window so bots receive paced signals. For massive catalogs, don’t lean on the sitemap priority field, which Google ignores; accurate lastmod timestamps are what help crawlers identify which URLs changed and deserve earlier attention.
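A sketch of a paced schedule, assuming a list of SKU IDs and a fixed publishing window. Batch size and window length are tuning parameters, not recommendations, and the seed simply makes a schedule reproducible.

```python
import random
from datetime import datetime, timedelta


def stagger_schedule(skus: list[str], start: datetime, window: timedelta,
                     batch_size: int, seed: int = 0) -> list[tuple[datetime, list[str]]]:
    """Spread SKU updates over a window in small batches with random offsets.

    Each batch gets its own evenly sized slot and a random time inside it,
    so crawlers and the origin see a steady trickle instead of one burst.
    """
    rng = random.Random(seed)
    batches = [skus[i:i + batch_size] for i in range(0, len(skus), batch_size)]
    if not batches:
        return []
    slot = window / len(batches)
    schedule = []
    for n, batch in enumerate(batches):
        offset = slot * n + slot * rng.random()  # somewhere inside slot n
        schedule.append((start + offset, batch))
    return schedule
```

For example, 1,000 SKUs in batches of 100 over a four-hour window yields ten batches, one per 24-minute slot.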

SEO workflow for WordPress stores in 2025

By 2025, SEO for ecommerce is a hybrid of automation, AI, and governance. Use AI-assisted keyword generation to scale long-tail discovery for product pages and blog content. Trafficontent’s keyword tools or a WordPress SEO keywords generator can seed topic ideas: combine high-intent product keywords with informational long-tail phrases to capture both purchase and research queries.

Standardize templates and employ best-in-class plugins like Yoast or Rank Math to enforce on-page SEO fields (title tags, meta descriptions, canonical links). Templates should be versioned: if a template produces a drop in CTR or rankings, version control (Git for templates or the plugin’s rollback) lets you revert quickly. Use automated briefs and topic clustering tools to keep content focused. AI can create an initial draft and an SEO-friendly meta block, but route drafts through a human editor to add nuance and brand voice and to catch edge cases where AI hallucinations could produce inaccurate product claims.

Performance-first SEO remains critical. Automate image compression, critical CSS injection, and resource minification in your build pipeline. Leverage a CDN with smart cache rules and ensure that your publish workflow triggers sitemap regeneration and CDN purges. Finally, integrate inventory and review feeds so pages reflect current stock and social proof; stale stock or missing reviews can reduce conversions and search relevance.

End-to-end automation architecture: from idea to indexation

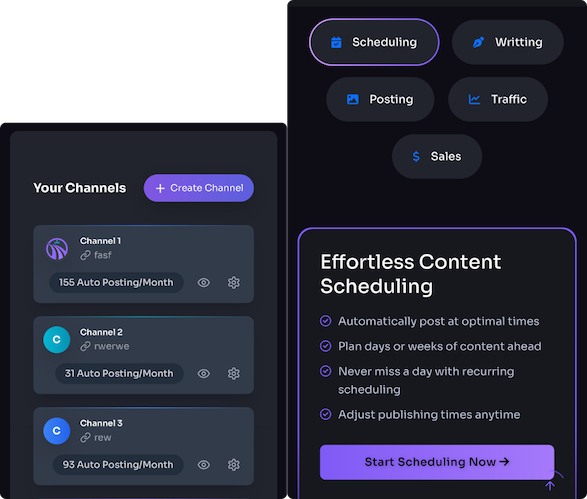

A reliable pipeline transforms a marketing brief into an indexable, performant page. Map the stages: intake → template mapping → draft generation → pre-publish checks → publish triggering → sitemap and cache updates → distribution. Trafficontent-style systems usually accept structured briefs with keyword targets, content type, product SKUs, and rights metadata. These briefs are validated and linked to a reusable template.

During draft creation, the system enriches content with media from feeds (hero images, thumbnails), auto-generates meta tags and Open Graph data, and builds JSON-LD for Article or Product schema. Pre-publish checks are critical: automated tests should verify that target keywords appear in the H1/H2, meta descriptions fit length limits, images have alt text, and internal links don’t 404. Business rules such as licensing entitlements and promotional pricing windows are enforced here as well, which prevents a discount from going live outside its window.
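A minimal sketch of these checks using Python’s built-in `html.parser`. Assumptions to flag: the 50 to 160 character meta description window is a common guideline rather than a search engine rule, an empty `alt` is treated as missing (relax this for decorative images), and internal links are checked against a set of known published paths instead of live HTTP requests.

```python
from html.parser import HTMLParser


class PageScanner(HTMLParser):
    """Collects H1/H2 text, images without alt text, and internal links."""

    def __init__(self):
        super().__init__()
        self.headings: list[str] = []
        self.in_heading = False
        self.missing_alt = 0
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("h1", "h2"):
            self.in_heading = True
            self.headings.append("")
        elif tag == "img" and not attrs.get("alt"):
            self.missing_alt += 1
        elif tag == "a" and (attrs.get("href") or "").startswith("/"):
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag in ("h1", "h2"):
            self.in_heading = False

    def handle_data(self, data):
        if self.in_heading:
            self.headings[-1] += data


def prepublish_checks(html: str, keyword: str, meta_description: str,
                      known_paths: set[str]) -> list[str]:
    """Return a list of blocking problems; an empty list means ready to publish."""
    scanner = PageScanner()
    scanner.feed(html)
    errors = []
    if not any(keyword.lower() in h.lower() for h in scanner.headings):
        errors.append(f"keyword '{keyword}' not in any H1/H2")
    if not 50 <= len(meta_description) <= 160:
        errors.append(f"meta description is {len(meta_description)} chars")
    if scanner.missing_alt:
        errors.append(f"{scanner.missing_alt} image(s) without alt text")
    broken = [p for p in scanner.links if p not in known_paths]
    if broken:
        errors.append(f"internal links to unknown paths: {broken}")
    return errors
```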

On publish, triggers should do several things in sequence: write the post to WordPress, regenerate the related sitemap file, notify search engines (Google picks up the refreshed lastmod from the sitemap; engines that support IndexNow can be notified directly), and purge or update CDN caches. If you cross-post to Shopify (for example, a WordPress blog post that references Shopify product pages), the automation should update Shopify’s analytics tags or create a mapping record so analytics attribution is consistent. Build rollback hooks: if a post-publish audit detects an error (broken microdata, missing canonical), the workflow should either unpublish the content or flag it for immediate human review.
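The sequence and rollback hook might look like the sketch below. Every step function, the `audit` callable, and `unpublish` are hypothetical hooks you would supply (for example, wrappers around the WordPress REST API, your sitemap generator, and your CDN’s purge API). `indexnow_payload` builds the JSON body defined by the IndexNow protocol, which is POSTed to an IndexNow endpoint such as `https://api.indexnow.org/indexnow`; the protocol also requires hosting the key file on your site.

```python
from typing import Callable


def indexnow_payload(host: str, key: str, urls: list[str]) -> dict:
    """JSON body for an IndexNow submission (host, key, keyLocation, urlList)."""
    return {
        "host": host,
        "key": key,
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": urls,
    }


def run_publish(post_id: int, steps: list[tuple[str, Callable[[int], None]]],
                audit: Callable[[int], list[str]],
                unpublish: Callable[[int], None]) -> dict:
    """Run publish steps in order, then audit the live page.

    A failed step stops the sequence and reports where it stopped so a retry
    queue can resume; a failed audit unpublishes the post for human review.
    """
    done = []
    for name, step in steps:
        try:
            step(post_id)
        except Exception as exc:
            return {"status": "incomplete", "failed_step": name,
                    "error": str(exc), "completed": done}
        done.append(name)
    problems = audit(post_id)  # e.g. missing canonical, invalid JSON-LD
    if problems:
        unpublish(post_id)
        return {"status": "unpublished", "problems": problems, "completed": done}
    return {"status": "live", "completed": done}
```

A typical `steps` list would pair names with your own functions, such as `[("write", write_post), ("sitemap", regenerate_sitemap), ("notify", notify_engines), ("purge", purge_cdn)]`, where all four function names are placeholders.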

Step-by-step implementation guide

Turn theory into action with a staged implementation plan that balances speed and risk. Below is a practical checklist you can follow that aligns Trafficontent triggers with WordPress and Shopify needs.

- Inventory & templates: Catalog content types (product pages, category hubs, blog posts) and assign a default template for each. Define required SEO fields — title, meta description, canonical, schema.

- Pick tools & integrations: Choose Trafficontent or a similar platform that supports webhooks, CDN purge APIs, and sitemap updates. Install a trusted WordPress SEO plugin and confirm compatibility.

- Build feeds: Create normalized JSON/XML feeds for inventory, reviews, and assets. Map fields like SKU, price, stock, and rating to schema properties.

- Automate pre-publish checks: Implement tests for meta lengths, header structure, image alt text, and JSON-LD validity. Include price and stock sanity checks to catch data-feed anomalies.

- Publish flow: Configure webhook triggers to write to WordPress, regenerate sitemaps, notify search engines (through sitemap lastmod, plus IndexNow where supported), and purge CDN caches. Add retry logic to handle transient failures.

- Analytics mapping: Create a mapping table that links WordPress posts to Shopify pages and ensures consistent UTM and canonical configurations.

- Monitoring & alerts: Wire Google Search Console, GA4, server logs, and Trafficontent dashboards into a single reporting view with alerts for indexation drops, crawl errors, or speed regressions.
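For the analytics-mapping step, a sketch that tags Shopify product links inside WordPress posts with one consistent UTM scheme and records which post links to which product handle. The `utm_source`/`utm_medium` values and the row layout are assumptions; Shopify product URLs follow the `/products/<handle>` pattern. This assumes the blog and store live on different hosts, since UTM tags on links within a single site can distort attribution.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_shopify_link(url: str, campaign: str, source: str = "wordpress",
                     medium: str = "blog") -> str:
    """Add a consistent UTM scheme to a link, keeping existing parameters."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({"utm_source": source, "utm_medium": medium, "utm_campaign": campaign})
    return urlunsplit(parts._replace(query=urlencode(query)))


def mapping_rows(post_id: int, post_slug: str, handles: list[str],
                 shop_base: str) -> list[dict]:
    """One row per WordPress post to Shopify product link (hypothetical layout)."""
    return [
        {"wp_post_id": post_id, "shopify_handle": handle,
         "link": tag_shopify_link(f"{shop_base}/products/{handle}", post_slug)}
        for handle in handles
    ]
```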

Run these steps in a test environment first. Version templates in Git, keep a changelog for schema mappings, and schedule nightly backups of both content and configuration.

Measuring SEO impact across WordPress and Shopify

Proof of impact requires aligned metrics. Before rolling out automation, record baselines for indexation rate, average time-to-index, crawl errors, organic sessions, and conversion rate. Use Google Search Console to monitor crawl frequency and index coverage, GA4 (or your analytics platform) for organic traffic and behavior, and server logs to see bot activity in real time.

Important KPIs to track post-deployment:

- Indexation velocity: percentage of new pages indexed within 24–72 hours

- Crawl errors: 404s and server errors correlated with publish events

- Page speed: field and lab metrics for pages created by automation

- Organic engagement: impressions, clicks, CTR, and session duration for new content

- Conversion metrics: add-to-cart and revenue for pages tied to product SKUs
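Indexation velocity can be computed from two timestamps per page. The sketch assumes you record each page’s publish time and the first time it was confirmed indexed (for example, by polling Search Console’s URL Inspection API); how you collect those timestamps is up to your pipeline.

```python
from datetime import datetime, timedelta
from typing import Optional

Page = dict[str, Optional[datetime]]  # keys: "published", "indexed" (None = not yet)


def indexation_velocity(pages: list[Page],
                        within: timedelta = timedelta(hours=72)) -> float:
    """Share of new pages indexed within `within` of publishing (0.0 to 1.0)."""
    if not pages:
        return 0.0
    hits = sum(
        1 for p in pages
        if p["indexed"] is not None and p["indexed"] - p["published"] <= within
    )
    return hits / len(pages)
```

Reporting the 24-hour and 72-hour figures side by side shows both how fast the first pages land and how many are still stuck.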

Run representative experiments: roll automation out to 5–10% of the catalog across varied categories and price points as a test cohort. Use control and variant groups for metadata changes (title variants, schema enrichments) and run the test for a fixed period, measuring impressions, clicks, and conversions. This incremental approach helps isolate positive lifts and avoid sweeping regressions.
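A sketch of deterministic cohort assignment, assuming SKUs are grouped by category. Hashing each SKU with a fixed salt puts it in a stable bucket, so it stays in the same group across runs, and because assignment happens within each category, every category contributes roughly the same share to the pilot. The salt string and function names are illustrative; stratifying by price band as well would follow the same pattern.

```python
import hashlib


def in_pilot(sku: str, percent: float, salt: str = "pilot-2025") -> bool:
    """Stable pseudo-random assignment: True for roughly `percent`% of SKUs."""
    digest = hashlib.sha256(f"{salt}:{sku}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # bucket in 0..9999
    return bucket < percent * 100


def split_by_category(catalog: dict[str, list[str]],
                      percent: float) -> dict[str, tuple[list[str], list[str]]]:
    """Return {category: (pilot_skus, control_skus)}."""
    return {
        category: ([s for s in skus if in_pilot(s, percent)],
                   [s for s in skus if not in_pilot(s, percent)])
        for category, skus in catalog.items()
    }
```

Changing the salt reshuffles the cohorts, which is useful when a later experiment should not reuse the same pilot SKUs.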

Practical rollout and risk management

Risk is manageable with discipline. Start with a small pilot: 5–10 product pages or a single category. Define explicit go/no-go criteria: a target percentage of pages indexed within 24–48 hours, no uptick in crawl errors, and stable organic traffic. If targets are missed, pause expansion and diagnose.

Version control and rollback plans are non-negotiable. Keep templates, sitemaps, and workflow code in Git with tagged releases. Use feature branches for significant automation changes and require peer reviews before merging. Pre-publish checks should include a "dry run" mode that writes to a staging site so you can validate how crawlers would see the content without exposing it to users.

Monitoring should be real-time and tied to automated alerts. Create dashboards that show impressions, clicks, index coverage, and server error rates segmented by pilot vs. control groups. Set Slack or email alerts for thresholds like a 10% rise in 5xx errors or a 15% drop in indexed pages. Preserve redundancy by keeping nightly backups and retaining a copy of sitemaps and template versions in staging. If an indexing anomaly appears — sudden delisting or widespread duplicate content — you should be able to unpublish the affected set and revert the template within minutes.
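The alert thresholds mentioned above can be checked with a small comparison against a baseline. The metric names are assumptions (they would come from your server logs and Search Console exports), and delivering the message to Slack or email is left out.

```python
def check_alerts(baseline: dict, current: dict,
                 max_5xx_rise: float = 0.10, max_index_drop: float = 0.15) -> list[str]:
    """Return alert messages when current metrics breach the thresholds."""
    alerts = []
    base_err, cur_err = baseline["errors_5xx"], current["errors_5xx"]
    if base_err == 0:
        if cur_err > 0:
            alerts.append(f"5xx errors appeared: {cur_err}")  # no baseline ratio possible
    elif (cur_err - base_err) / base_err > max_5xx_rise:
        alerts.append(f"5xx errors up {(cur_err - base_err) / base_err:.0%}")
    base_idx, cur_idx = baseline["indexed_pages"], current["indexed_pages"]
    if base_idx and (base_idx - cur_idx) / base_idx > max_index_drop:
        alerts.append(f"indexed pages down {(base_idx - cur_idx) / base_idx:.0%}")
    return alerts
```

For example, a baseline of 40 server errors and 4,800 indexed pages against current values of 50 and 4,000 produces `["5xx errors up 25%", "indexed pages down 17%"]`.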

Finally, govern data feeds. Many issues arise from inconsistent feed fields: different field names for price across vendors or missing rating counts can break structured data. Implement feed validation rules and a quarantine process that prevents malformed feed entries from publishing until corrected.
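A sketch of that validation-and-quarantine step, assuming vendor feeds arrive as dictionaries with inconsistent field names. The alias table is hypothetical; in practice it grows as new vendors are onboarded, and quarantined rows would be routed to whoever owns the feed.

```python
# Hypothetical vendor field names mapped to the canonical names the templates expect
FIELD_ALIASES = {
    "sku": "sku", "SKU": "sku", "item_id": "sku",
    "price": "price", "Price": "price", "unit_price": "price",
    "review_count": "review_count", "reviews": "review_count", "rating_count": "review_count",
}
REQUIRED = ("sku", "price")


def normalize(raw: dict) -> dict:
    """Rename known aliases; fields with no mapping are dropped."""
    return {FIELD_ALIASES[k]: v for k, v in raw.items() if k in FIELD_ALIASES}


def issues(row: dict) -> list[str]:
    """Problems that should keep a normalized row from publishing."""
    found = [f"missing {field}" for field in REQUIRED if field not in row]
    if "price" in row and not isinstance(row["price"], (int, float)):
        found.append("price is not numeric")
    if "review_count" in row and not isinstance(row["review_count"], int):
        found.append("review_count is not an integer")
    return found


def triage(feed: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split a feed into publishable rows and a quarantine list."""
    ready, quarantine = [], []
    for raw in feed:
        row = normalize(raw)
        problems = issues(row)
        if problems:
            quarantine.append({"raw": raw, "issues": problems})
        else:
            ready.append(row)
    return ready, quarantine
```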

Case study: a mid-market WordPress storefront

Consider a mid-market store with roughly 5,000 SKUs that moved from manual updates to an automated pipeline using Trafficontent-style workflows. The team’s goals were clear: reduce time-to-publish, keep product data fresh, and make new SKUs discoverable faster.

Implementation highlights included templated product pages with consistent schema, automated metadata pulled from normalized feeds, and immediate sitemap updates with ping notifications to search engines. Instead of full-batch republishing, the pipeline staggered updates by category and invoked CDN purges for the modified assets. Pre-publish checks validated price ranges and review counts and prevented out-of-window promotions from going live.

The results were measurable: average indexing time dropped by about 40% and organic traffic to newly published items increased roughly 25% within three months. New products began receiving impressions and clicks much sooner, which improved discoverability for long-tail queries. Challenges emerged from inconsistent field names across feeds; feed-level validation and a mapping layer that normalized variant field names to canonical schema properties resolved them. The takeaways were straightforward: governance, template versioning, and a staged rollout were essential to sustain gains and prevent surprises.

Next step: pick one small category, map its template, and run a week-long pilot with Trafficontent’s auto-publish feature. Track indexation velocity, server load, and user engagement and iterate from there.